The physical portion of the installation, in its modified form at the Open Gallery at 49 McCaul

Project year: 2013

Installation art (student group project)

Exhibited in Disrupting Undoing Salon (juried), April 1–5, 2013 at the Open Gallery, OCAD.

Concept: Jan Derbyshire, Ambrose Li

Woodwork: Angela Punshon, Qi Chen

Motor–sprocket assembly: Angela Punshon

Electronics: Angela Punshon, Ambrose Li

Hardware control: Angela Punshon, Ambrose Li

Copywriting: Ambrose Li, Angela Punshon, Jan Derbyshire

Graphic design: Ambrose Li

Website development: Ambrose Li

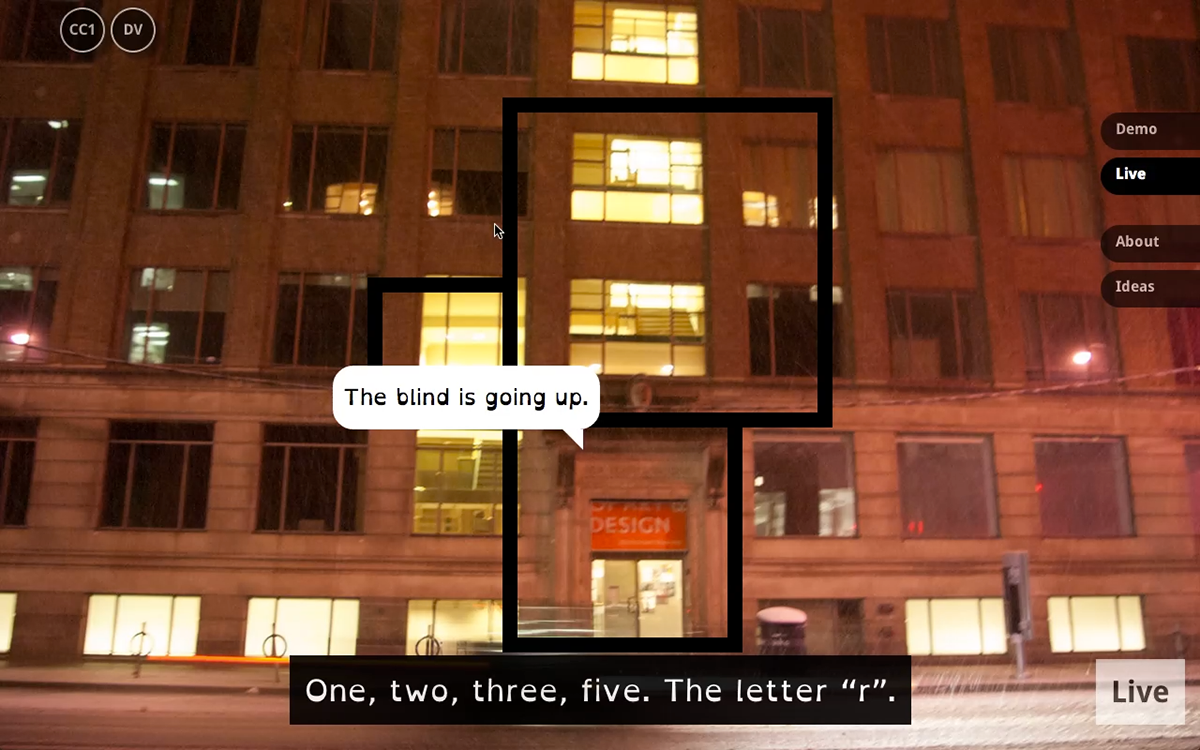

The online portion of the installation, featuring a reversal of experience where descriptions and captions were central to a video-free “live broadcast”

Blind Reading was an installation consisting of a motorized mechanism that moved a set of window blinds according to a preprogrammed schedule derived from an encoded message, and a website that decoded this sequence of blind movements back into its original, unencoded form: an English sentence.

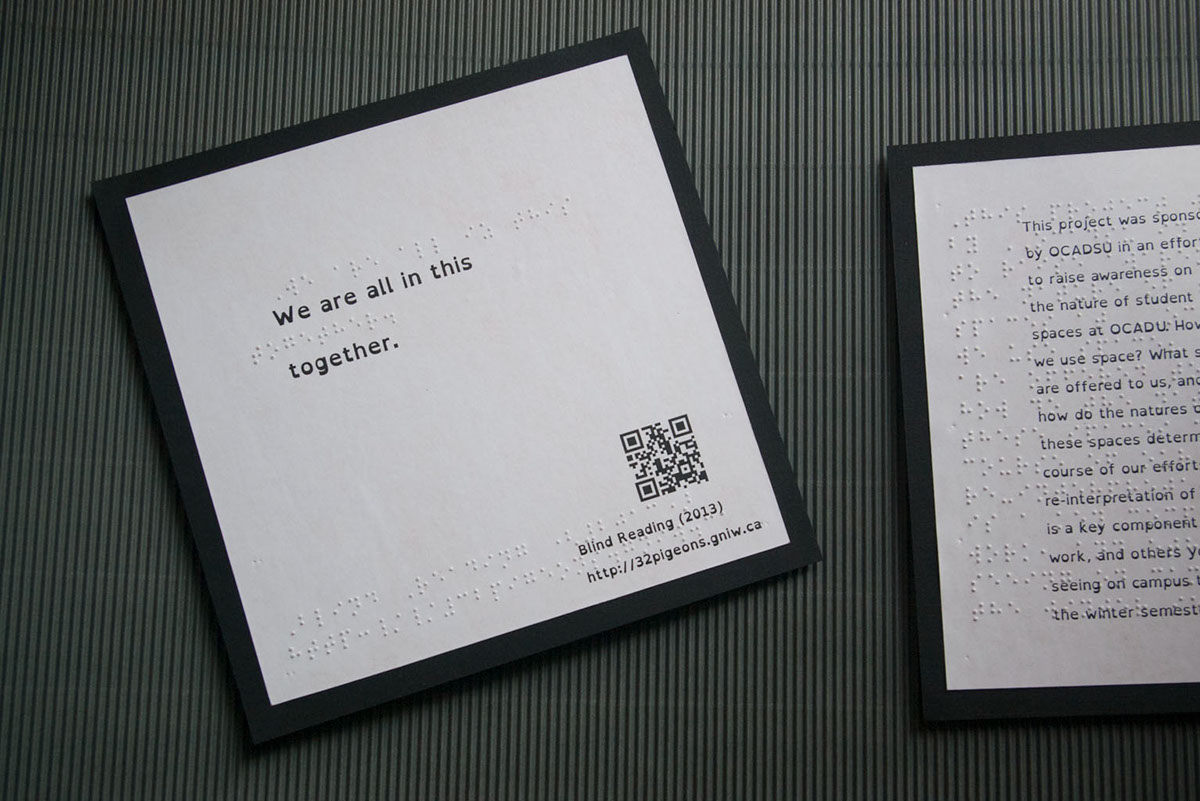

The specific encoding chosen was a serialized form of Braille where successive encoded Braille cells were set off by half-open window blinds. Because of the obscurity of the encoding, people might suspect something was being communicated but would not be able to make sense of the message. The physical portion of the installation thus speaks of the frustration of knowing something is being said but not knowing what it is: The viewer was in effect being “disabled.”
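The serialization described above can be illustrated with a minimal Python sketch. It is not the installation's own code: the state labels `open`, `closed`, and `half`, the mapping of a raised dot to `open`, and the function names are all assumptions made for illustration, and only a handful of letters are included.

```python
# Standard 6-dot Braille patterns for a few letters, as raised dot positions.
BRAILLE_DOTS = {
    "a": (1,),
    "b": (1, 2),
    "c": (1, 4),
    "d": (1, 4, 5),
    "e": (1, 5),
}


def encode_letter(letter):
    """Return the six bits of one Braille cell, dot 1 first."""
    dots = BRAILLE_DOTS[letter]
    return [1 if position in dots else 0 for position in range(1, 7)]


def encode_message(message):
    """Turn a message into a flat list of blind states.

    Assumption: a raised dot is shown as "open", an absent dot as "closed",
    and a "half" state is appended after every cell as the separator.
    """
    states = []
    for letter in message:
        for bit in encode_letter(letter):
            states.append("open" if bit else "closed")
        states.append("half")
    return states
```

Under these assumptions, `encode_message("a")` yields one `open`, five `closed`, and a closing `half`.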

A QR code on an information panel directed the viewer to a website where the blind movements could be decoded, revealing the original message. The virtual portion of the installation thus acted, in a way, as an “assistive technology” for people who would normally not consider themselves to be disabled and in need of assistive technologies.

Originally conceived as a site-specific installation at the Inclusive Design Research Centre (IDRC)’s “Distributed Collaborative Laboratory” at 205 Richmond Street West, Blind Reading traces its roots to the desire to raise awareness within OCAD of the existence of the IDRC and the Inclusive Design program.

The installation was first shown in its original form during OCAD’s Grad Open House on March 15, 2013. It was subsequently shown in a modified form in OCAD’s Open Gallery at 49 McCaul Street as part of the Disrupting Undoing Salon from April 1–5, 2013.

The blinds were half open for 10 seconds at approximately 2:25, indicating that an encoded Braille cell had finished transmission. Each blind movement was followed by 10 seconds of delay, so between 0:00 and 2:25 the sequence of blind movements corresponded to the bits 1, 0, 0, 0, 0, 0, or a normal 6-dot Braille cell with just a single dot at position 1, which in turn corresponds to the English letter “a.”
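The worked example above (bits 1, 0, 0, 0, 0, 0 followed by a half-open separator giving “a”) can be reproduced with a short Python sketch. The state labels and the function name are hypothetical, and the table covers only three letters; the website's actual decoder was written in PHP and is not reproduced here.

```python
# Six-bit cells (dot 1 first) for a few letters of standard Braille.
CELL_TO_LETTER = {
    (1, 0, 0, 0, 0, 0): "a",
    (1, 1, 0, 0, 0, 0): "b",
    (1, 0, 0, 1, 0, 0): "c",
}


def decode_states(states):
    """Decode a list of blind states back into letters.

    Assumption: "open" is bit 1, "closed" is bit 0, and "half" marks the
    end of a cell. Cells that are incomplete are skipped; complete cells
    that are not in the table decode to "?".
    """
    letters = []
    bits = []
    for state in states:
        if state == "half":
            if len(bits) == 6:
                letters.append(CELL_TO_LETTER.get(tuple(bits), "?"))
            bits = []
        else:
            bits.append(1 if state == "open" else 0)
    return "".join(letters)
```

A cell that has not yet been closed by a `half` state produces no output, which matches the idea that a letter can only be shown once its transmission has finished.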

Copy on the information panels was arranged so that each line of printed text corresponded exactly to its Braille equivalent; i.e., the Braille layout was deliberately treated as a constraint on the typography. The Braille was deliberately placed before the type to convey the idea that Braille need not be a “second-class citizen.”

In its original site-specific form, the motor was directly attached to the window blinds of the Distributed Collaborative Laboratory.

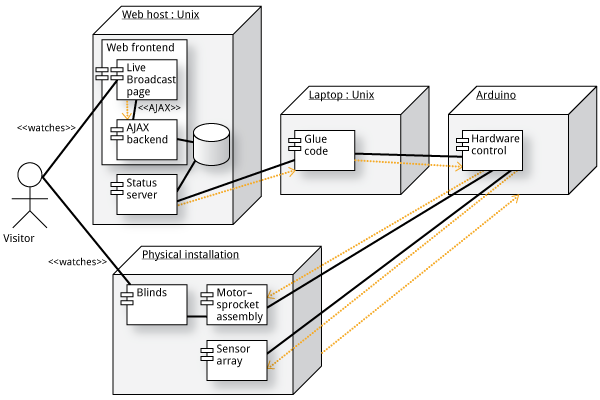

Appendix 1: Overview of the software system architecture

The software system comprised four separate but connected subsystems, which can be described in UML as four components in three deployment nodes: the hardware control code on the Arduino (written in Arduino-flavoured C++), the website backend (written in PHP), the website frontend (written in HTML5, CSS3, and JavaScript), and some glue code on a laptop that tied the Arduino and the website together (written in Python).

The physical portion of the installation was controlled by code on the Arduino, which periodically sent status messages to the serial port. Because serial communication on the Arduino is nonblocking, the Arduino and the physical installation could together function as a standalone installation when the messages did not need to be decoded through the website.

In order for the decoding to happen, the glue code on the laptop relayed these status messages to the web backend using a simple text-based protocol. To avoid excessive network traffic, the set of status messages was pre-filtered.
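The source does not say how the status messages were pre-filtered. One plausible approach, sketched below in Python, is to relay a message only when it differs from the last one relayed; the message strings and the function name are hypothetical.

```python
def prefilter(messages):
    """Yield each status message only if it differs from the previous one.

    Assumption: consecutive identical messages carry no new information,
    so dropping them reduces traffic without losing state changes.
    """
    last = None
    for message in messages:
        if message != last:
            yield message
            last = message
```

Because the function is a generator, it can wrap any iterable of lines, including a stream read line by line from a serial port.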

At the web backend the status messages were interpreted to determine the current state of the window blinds, and the sequence of such states was decoded, if possible, into its original unencoded form. Both of these were saved to a JSON-encoded text file which served as a shared message store between the front- and backend.
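A shared JSON message store of this kind can be sketched as follows. The original backend was written in PHP; this Python version only illustrates the idea, and the field names `state` and `decoded`, the function names, and the write-then-rename step are assumptions rather than details taken from the project.

```python
import json
import os
import tempfile


def save_store(path, state, decoded):
    """Write the current blind state and decoded text to a JSON file.

    The data is written to a temporary file first and then renamed over
    the target, so a reader never sees a half-written file.
    """
    payload = {"state": state, "decoded": decoded}
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as handle:
        json.dump(payload, handle)
    os.replace(tmp_path, path)


def load_store(path):
    """Read the shared message store back as a dictionary."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)
```

The rename step matters when one process writes while another polls the file, which is the situation a shared store between a backend and an AJAX callback creates.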

The frontend consisted of a web page and an AJAX callback, which took the JSON-encoded message in the shared message store and relayed it back to the JavaScript code on the web page. The JavaScript then interpreted this and used jQuery to modify the web page to display the “live broadcast.”

The visitor interacted with the installation by watching the physical installation and, optionally, the captions and descriptions provided by the Live Broadcast page. The physical installation required the Arduino to work, and the code on the Arduino in turn depended on the motor–sprocket assembly and the sensor array on the physical installation.

Appendix 2: The sensor array

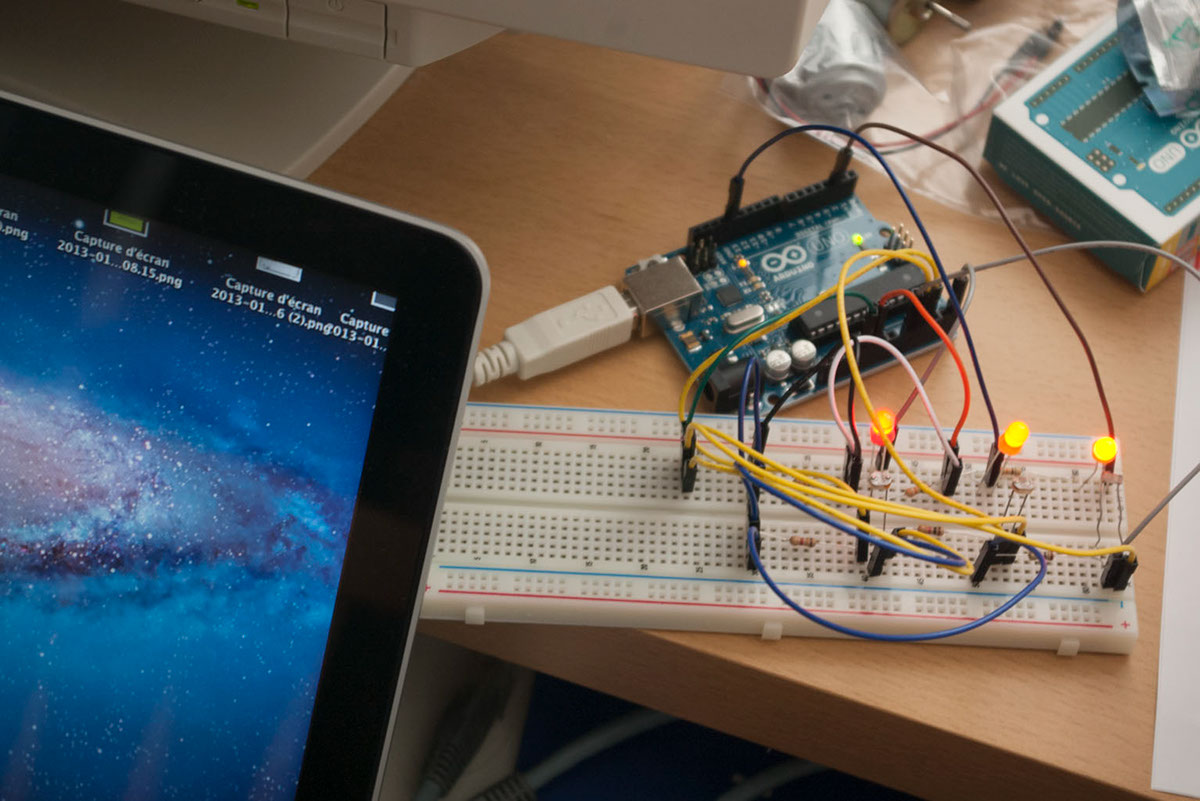

The primary means for the installation to detect the position of the blinds was a set of three sensors, each consisting of an LDR (light-dependent resistor) paired with an LED (light-emitting diode) blinking at a controlled, constant rate while the installation was running.

As the LDR is known to lack the ability to accurately measure ambient lighting conditions, the LEDs were designed to blink, which artificially introduced a difference in light levels near the LDR. The assumption was that if a blind was blocking the path between the LDR and the LED, the additional light from the LED would not reach the LDR, and so the LDR would detect no difference between two successive reads; on the other hand, if the path between the LDR and the LED was not blocked, the LDR would be able to detect increased light levels. The LDR–LED pair should thus be able to determine the presence or absence of blinds without knowing how the ambient lighting conditions would affect the LDR’s measurements.

A delay in the LED’s response was anticipated, so a delay of approximately 100 milliseconds was inserted between flashing the LEDs and taking readings from the LDRs.
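The blink-and-compare logic can be sketched in Python as a simulation. The real code ran on the Arduino in C++; here `read_ldr` and `set_led` are hypothetical callables standing in for the hardware, the threshold value is made up, and the sketch assumes a higher reading means more light.

```python
import time


def path_is_clear(read_ldr, set_led, threshold=20, settle_seconds=0.1):
    """Return True if the LED's light reaches the LDR (no blind in the way).

    Takes one reading with the LED off and one with it on, waiting briefly
    after each switch, and compares the difference against a threshold.
    Ambient light affects both readings equally, so it cancels out.
    """
    set_led(False)
    time.sleep(settle_seconds)
    dark = read_ldr()
    set_led(True)
    time.sleep(settle_seconds)
    lit = read_ldr()
    set_led(False)
    return (lit - dark) > threshold
```

Comparing two readings taken a fraction of a second apart is what lets the sensor ignore the absolute ambient level, which the LDR alone cannot measure reliably.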

In the modified version exhibited at 49 McCaul Street, the bottom sensor was replaced by a limit switch to improve sensor array accuracy.

Prototype of the sensor array design being tested off-site before being implemented at the original site at 205 Richmond Street West

Support and documentation:

External communications: Angela Punshon, Jan Derbyshire

Photography: Ambrose Li, Angela Punshon

Videography: Ambrose Li, Jan Derbyshire